Exceptions All The Way Down

What really is an exception, once you drill all the way down? That question left me wondering one day.

Of course, if you've been coding for a while, you already know what an exception is at the surface level. As the name suggests, it is an "exception to the rule", a case we are not prepared to process normally, and therefore we must either handle it explicitly in a try-catch construct, or let it propagate (or sometimes, both).

But that only shifts the question one layer deeper into the stack. Why can't we process that case normally? And what exactly would happen if we just let it run? We know what happens at the top of the stack: the language runtime does something internally that stops the associated OS (operating system) process. It exits the program, usually with a message pointing to the line of code where the exception happened, plus other helpful details about the surrounding context. But it often doesn't tell us where the problem truly originates or what exactly failed. Did the compilation fail? Did the CPU crash while executing the binary? Or was it something else entirely?

The answer to some of those questions will, of course, largely depend on the type of exception at hand. Let's follow the interesting journey of Python's ZeroDivisionError exception as an example. But first, a bit of background.

As we all know, division by zero is not allowed in mathematics. Perhaps the simplest explanation comes from division being the inverse of multiplication: x/0 would have to be a number y such that y * 0 = x. But y * 0 = 0 for every y, so when x is not 0, no such y exists. And when x is 0 as well, every y satisfies the equation, so 0/0 has no single answer either. Now I'm sure mathematicians can make this discourse last for days and add layer after layer of debate to it, but for the rest of us, we simply accept it as a fact of the domain at hand.

But computers don't know that by default. What does a CPU know about mathematical limitations? It's just a piece of silicon at the end of the day. To be a useful computing machine, though, it must respect the basic rules, so computers don't allow you to divide by zero either. And since the metal itself is naive to those rules, as we've established, the check that prohibits (or recovers from) division by zero must be coded somewhere in the stack, right? Let's find out.

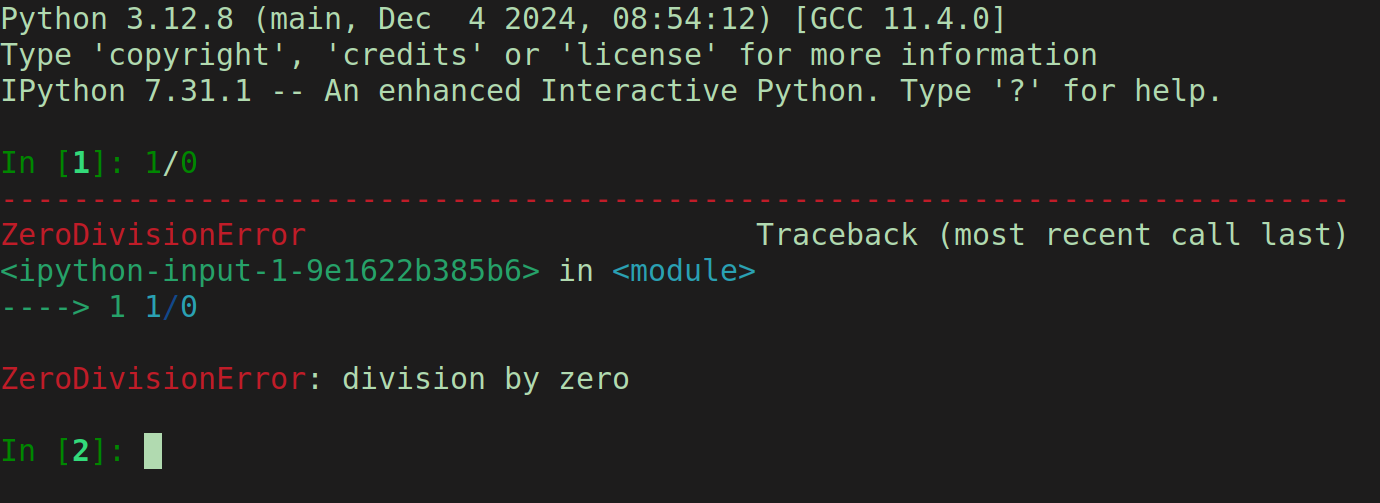

I've opened an interactive Python REPL (with IPython) and executed the operation 1/0. The result is as shown below:

Alright, that matches what we've said so far. The interactive shell also handles the exception for us: IPython, like the standard Python REPL, prints the traceback and moves to the next prompt, and the process keeps running. Had this been a plain Python script run directly, execution would have stopped at that line and the process would have exited.
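Here's a small sketch of that difference between the shell and a script, assuming a standard CPython 3.7+ install. It runs 1/0 as a one-line script in a separate interpreter via subprocess, then triggers the same error in the current process and catches it.

```python
import subprocess
import sys

# Run "1/0" as a one-line script in a separate interpreter process.
# Uncaught, the exception ends that process with a traceback on stderr.
result = subprocess.run(
    [sys.executable, "-c", "1/0"],
    capture_output=True,
    text=True,
)
print("exit status:", result.returncode)
print(result.stderr.strip().splitlines()[-1])

# In our own process, the same error can be caught and handled instead.
try:
    1 / 0
except ZeroDivisionError as exc:
    caught = exc
    print("caught:", type(exc).__name__, "-", exc)
```

The child process exits with a non-zero status and its stderr names ZeroDivisionError, while the in-process version simply carries on after the except block.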

But where did that come from? Was it Python's math module? The C code in CPython's interpreter? The machine code? Here's what happens specifically at this level:

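- the Python bytecode for the division (BINARY_OP since Python 3.11, BINARY_TRUE_DIVIDE in earlier Python 3 versions; BINARY_DIVIDE was Python 2's opcode for classic division) triggers a corresponding function in the CPython runtime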

- the CPython runtime checks if the divisor (0 in this case) is valid

- since the divisor is 0 and thus invalid, CPython raises the ZeroDivisionError exception using its error-handling mechanisms
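You can inspect that bytecode yourself with the standard dis module. The function name divide is just for illustration; its operands are parameters rather than literals, so the compiler has nothing to precompute.

```python
import dis

def divide(a, b):
    # Operands are variables, so the division must happen at runtime.
    return a / b

# Opcode names CPython compiled for the function body.
opnames = [instr.opname for instr in dis.get_instructions(divide)]
print(opnames)

# Full human-readable listing, including the operator shown for BINARY_OP.
dis.dis(divide)
```

On Python 3.11 and later the division shows up as BINARY_OP with the / operator; on Python 3.0 to 3.10 it appears as BINARY_TRUE_DIVIDE.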

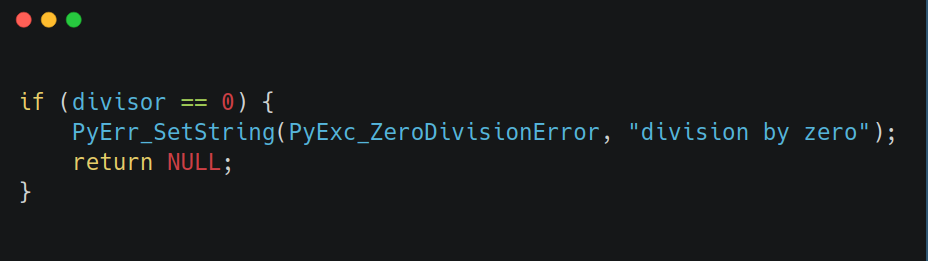

If you head to the implementation, you'll find something like this:

So far, we know it's the Python interpreter (the C code) that raises the exception, before the actual division is attempted. The exception bubbles up to the Python code in our program, where it can be caught and handled, or left unhandled to crash the program. Failing early and propagating is simply good language design on Python's part. But what if the Python interpreter did nothing to take care of that, and simply passed the problem down to C? How does C deal with it?
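CPython's real check lives in C, but the same check-then-raise pattern and its propagation can be sketched at the Python level. Both checked_divide and average are hypothetical helpers, not part of any library; the traceback records each frame the error travels through until something catches it.

```python
import traceback

def checked_divide(a, b):
    # Hypothetical helper: validate the divisor *before* dividing,
    # mirroring the check-first approach described above.
    if b == 0:
        raise ZeroDivisionError("division by zero")
    return a / b

def average(values):
    # No handling here: the error from checked_divide propagates through.
    return checked_divide(sum(values), len(values))

try:
    average([])
except ZeroDivisionError as exc:
    # Frame names from the traceback, outermost first.
    frames = [frame.name for frame in traceback.extract_tb(exc.__traceback__)]
    print(frames)
```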

Well, from what I could find, it turns out that C the language (not to be confused with C applications like CPython) doesn't specifically guard against division by zero. The C standard simply declares division by zero undefined behavior, so nothing in the language itself prohibits it at runtime. In most cases, division by zero in C is left unchecked until it reaches the hardware level. That is not that surprising, given that C is a low-level language designed for maximum performance and minimal overhead, leaving much of the responsibility to the programmer. If you want such runtime checks in C, you must implement them yourself.

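What is interesting is that integer and floating-point division by zero behave differently once compiled. Something like int x = 1 / 0; (or, more realistically, an integer division whose divisor turns out to be 0 at runtime, since compilers warn about a literal zero) typically leads to a hardware exception, while 1.0 / 0.0 executes normally on IEEE 754 hardware and evaluates to infinity. IEEE 754 infinities are signed: the result is positive infinity when the operands have the same sign and negative infinity when they differ (for example -1.0 / 0.0, or 1.0 / -0.0). And 0.0 / 0.0 doesn't give infinity at all, but NaN.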
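Plain Python raises on 1.0 / 0.0, so it can't show this behavior directly. As a sketch of what IEEE 754 hardware does instead (and what C implementations following the standard's IEEE annex, Annex F, inherit), the hypothetical helper ieee_divide_by_zero below spells out the default, non-trapping rules for a zero divisor.

```python
import math

def ieee_divide_by_zero(x: float, zero: float) -> float:
    """Hypothetical helper: the result IEEE 754 specifies for x / zero
    under its default (non-trapping) exception handling."""
    if zero != 0.0:
        raise ValueError("divisor must be +0.0 or -0.0")
    if math.isnan(x) or x == 0.0:
        # 0/0 is an "invalid operation", and NaN/0 just propagates NaN.
        return math.nan
    # Otherwise the result is infinity, signed by the XOR of the operand
    # signs, so the sign of the zero divisor matters too.
    negative = (math.copysign(1.0, x) < 0) != (math.copysign(1.0, zero) < 0)
    return -math.inf if negative else math.inf

for x, zero in [(1.0, 0.0), (-1.0, 0.0), (1.0, -0.0), (0.0, 0.0)]:
    print(f"{x} / {zero} -> {ieee_divide_by_zero(x, zero)}")
```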

C peculiarities aside, back on track: is the error ultimately thrown by software or hardware? If checks are implemented, as in CPython, the error is clearly thrown by software. But if CPython let the hardware perform the division, the result would be (at least for integers) a CPU exception or fault, and Python is too high-level a language to deal with CPU faults directly. Instead, it raises a software exception right away (higher up the stack, and usually easier to handle or recover from) to avoid the hardware exception that would otherwise follow.
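The sketch below checks that CPython's software guard covers integer and float division alike, even though IEEE 754 hardware would happily return infinity for the float case. It only uses the standard operator module.

```python
import operator

# Each case pairs a readable label with the operator function that performs it.
cases = [
    ("1 / 0", operator.truediv, 1, 0),
    ("1.0 / 0.0", operator.truediv, 1.0, 0.0),
    ("1 // 0", operator.floordiv, 1, 0),
    ("1 % 0", operator.mod, 1, 0),
]

raised = {}
for label, op, left, right in cases:
    try:
        op(left, right)
    except ZeroDivisionError as exc:
        # CPython's software check raised instead of dividing.
        raised[label] = True
        print(f"{label:>10} -> ZeroDivisionError: {exc}")
    else:
        raised[label] = False
```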

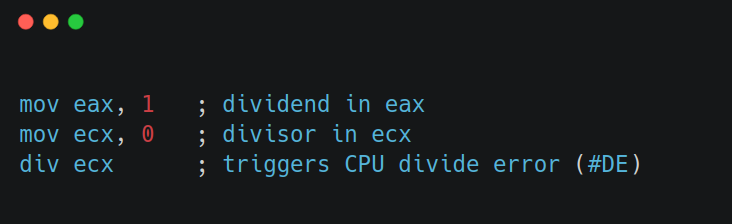

As we said, if the division were attempted directly in machine code, with no software checks in place, the CPU would trigger a hardware-level exception. On x86 architectures, a division by zero causes a divide error exception (#DE). Whether that crashes the program is up to the OS, which decides how to handle it. On bare-metal embedded systems (where there is no OS at all), the program would probably just crash. Not every architecture traps, though: on 64-bit ARM, for example, integer division by zero simply writes 0 to the destination register, leaving any check to software.

Now we know that the hardware (CPU) would raise an exception if asked to perform the operation, but that's still a bit too abstract to tell where the problem really originates, so let's dive further. The CPU’s Arithmetic Logic Unit (ALU) is the component that tries to perform the division. Since division by zero is undefined, there is no meaningful result for it to compute. Instead, it triggers a hardware-level exception (sometimes called a "trap" or "fault"). So the failure happens inside the CPU, specifically in the execution pipeline where the arithmetic operation occurs.

It's important to remember that the CPU is not separate from its interrupt-handling mechanisms. Interrupts are an intrinsic part of how the CPU handles exceptional cases, like invalid instructions or arithmetic errors. Without the interrupt system, the CPU would execute the division and produce invalid results, potentially corrupting the program state.

Here's what this would look like in Assembly:

The division by zero never completes; instead, the hardware interrupts the program's flow. So in this case it is a hardware exception. But what does that mean on its own?
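To make the fault condition concrete, here's a rough Python model of x86's 32-bit signed IDIV instruction, which divides the 64-bit value in EDX:EAX by a 32-bit operand. The names idiv32 and DivideError are hypothetical stand-ins. One detail worth knowing: #DE is raised not only for a zero divisor but also when the quotient doesn't fit in the destination register, as in INT32_MIN / -1.

```python
from typing import Tuple

INT32_MIN, INT32_MAX = -(2**31), 2**31 - 1
INT64_MIN, INT64_MAX = -(2**63), 2**63 - 1

class DivideError(Exception):
    """Stand-in for the x86 #DE fault."""

def idiv32(dividend: int, divisor: int) -> Tuple[int, int]:
    """Hypothetical model of 32-bit IDIV: EDX:EAX / divisor -> (quotient, remainder)."""
    if not (INT64_MIN <= dividend <= INT64_MAX and INT32_MIN <= divisor <= INT32_MAX):
        raise ValueError("operand does not fit the 32-bit IDIV form")
    if divisor == 0:
        raise DivideError("divide error: divisor is zero")
    # IDIV truncates toward zero (unlike Python's //), and the remainder
    # takes the sign of the dividend.
    quotient = abs(dividend) // abs(divisor)
    if (dividend < 0) != (divisor < 0):
        quotient = -quotient
    remainder = dividend - quotient * divisor
    # A quotient too large for EAX triggers the very same fault.
    if not INT32_MIN <= quotient <= INT32_MAX:
        raise DivideError("divide error: quotient overflow")
    return quotient, remainder

print(idiv32(7, 2), idiv32(-7, 2))
```

Note that idiv32(-7, 2) returns (-3, -1) because IDIV truncates toward zero, whereas Python's own -7 // 2 gives -4.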

A hardware exception is nothing more than a low-level mechanism for signaling errors or special conditions to the OS or program. The CPU generates a trap or interrupt, which is essentially a jump to a predefined piece of code (an interrupt handler) in response to the error. Each kind of exception is identified by a number, called its vector. On x86 CPUs, as we said, division by zero triggers #DE (Divide Error), which is vector 0. The vector tells the OS what type of error occurred.

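One important aspect is that before the handler runs, the CPU saves part of its current state so the OS can inspect what caused the issue. On x86, the hardware saves the instruction pointer (still pointing at the faulting instruction), the flags, and a few related registers; the OS handler then saves the remaining registers itself.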
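A toy model of that sequence, assuming heavily simplified hardware: the class Cpu, its div_rcx method, and divide_error_handler are hypothetical names. The CPU finds the handler by vector number in a table (on x86, the Interrupt Descriptor Table plays this role), saves the faulting instruction pointer and flags first, and the handler reads that saved state to report what went wrong.

```python
from dataclasses import dataclass, field

DIVIDE_ERROR = 0  # #DE is interrupt vector 0 on x86

@dataclass
class Cpu:
    # Hypothetical, heavily simplified register file.
    rip: int = 0
    rax: int = 0
    rcx: int = 0
    rflags: int = 0x2
    idt: dict = field(default_factory=dict)    # vector number -> handler
    stack: list = field(default_factory=list)  # where the CPU saves state

    def fault(self, vector: int):
        # Save the faulting instruction's address and the flags, then
        # transfer control to whatever handler the OS registered.
        self.stack.append((self.rip, self.rflags))
        return self.idt[vector](self, vector)

    def div_rcx(self):
        # Simplified "div rcx" (real x86-64 divides RDX:RAX): check first.
        if self.rcx == 0:
            return self.fault(DIVIDE_ERROR)
        self.rax //= self.rcx
        return None

def divide_error_handler(cpu: Cpu, vector: int) -> str:
    # Stand-in for the OS handler: read the saved frame to see what faulted.
    saved_rip, _saved_flags = cpu.stack[-1]
    return f"#DE (vector {vector}) at rip={saved_rip:#x}"

cpu = Cpu(rip=0x401000, rax=1, rcx=0)
cpu.idt[DIVIDE_ERROR] = divide_error_handler
report = cpu.div_rcx()
print(report)
```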

Once the hardware exception is raised, the CPU jumps to the handler the OS registered for that vector. For example, the Linux kernel has handlers for hardware exceptions, including divide errors; for a user program, it translates the divide error into a SIGFPE signal sent to the offending process.

The OS then decides what to do with it. For user programs, the default action for that signal terminates the program and generates a core dump (where core dumps are enabled). For faults in kernel code, the kernel typically logs the error (an "oops" on Linux) and may kill the offending task or halt the system. If the program has installed a handler for the signal, the OS notifies the program instead of killing it.
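To watch the "notify instead of kill" path without a real fault, the sketch below installs a Python handler for SIGFPE and sends the signal to its own process with signal.raise_signal (Python 3.8+). It assumes it runs in the main thread, which signal.signal requires. It's a simulation: the signal comes from software, so no #DE is involved. For a genuine hardware SIGFPE, POSIX leaves the behavior undefined if the handler simply returns, and on x86 the faulting instruction would typically just run again.

```python
import signal

received = []

def on_sigfpe(signum, frame):
    # Runs instead of the default action (terminate and dump core).
    received.append(signal.Signals(signum).name)

previous = signal.signal(signal.SIGFPE, on_sigfpe)
try:
    # Simulated: sent by software, not produced by a real divide error.
    signal.raise_signal(signal.SIGFPE)
finally:
    # Restore whatever disposition was there before.
    signal.signal(signal.SIGFPE, previous)

print(received)
```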

The ALU is a hardware component and operates independently of software for basic arithmetic and logical operations. The hardware exception would occur either way; what depends on the software is how that exception is handled or recovered from. But let's dive one step further. If the ALU is a purely hardware component, how does it know that division by zero is not defined? How does it know what is defined and what isn't? Silicon doesn't just know stuff. Well, the ALU is built from logic gates (combinations of transistors) configured to perform arithmetic and logical operations. These gates are hardwired to implement specific behaviors, including handling invalid operations. The ALU doesn’t "know" mathematics in the abstract sense; it simply follows hardwired rules encoded in its circuit design. If the divisor is zero, the ALU raises a control signal indicating an error. This "check" is not software but hardware logic, implemented directly in silicon. It’s a physical constraint, much like how a gear system is designed to prevent certain movements.
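Here's a gate-level sketch of such a check, assuming unsigned 8-bit operands: a zero detector ORs all divisor bits together and inverts the result, and that single output can serve as the error line. The functions to_bits, divisor_is_zero, and divide_unit are hypothetical names for illustration, not a description of any specific CPU.

```python
from typing import List

def to_bits(value: int, width: int) -> List[int]:
    # The divisor as individual wires, least significant bit first.
    return [(value >> i) & 1 for i in range(width)]

def divisor_is_zero(divisor: int, width: int = 8) -> int:
    # Zero detector: OR every bit together, then invert (one wide NOR).
    # Real hardware would use a tree of smaller gates; the logic is the same.
    any_bit_set = 0
    for bit in to_bits(divisor, width):
        any_bit_set |= bit
    return 1 - any_bit_set  # NOT gate: 1 only if all bits were 0

def divide_unit(dividend: int, divisor: int, width: int = 8) -> dict:
    # Hypothetical divider front end for unsigned width-bit operands:
    # the zero flag drives an "error" control line instead of a result.
    error = divisor_is_zero(divisor, width)
    if error:
        return {"error": 1, "quotient": None, "remainder": None}
    return {"error": 0, "quotient": dividend // divisor, "remainder": dividend % divisor}
```

For example, divide_unit(9, 0) raises the error line, while divide_unit(9, 2) returns quotient 4 and remainder 1.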

While the ALU itself is hardware, many modern CPU designs also involve microcode: a low-level layer of firmware that translates complex instructions (like DIV) into simpler hardware operations. Some CPU instructions are executed directly by the ALU without microcode involvement (e.g. addition or logical AND), whereas more complex instructions, like division, may involve microcode, and the microcode might include a check for a zero divisor. Many designs likely also keep a hardwired zero check in the divider itself, since that is cheap and reliable, but the exact split between microcode and hardwired logic varies by design and is rarely documented. Either way, the error detection is intrinsic to the CPU's design and not offloaded to whatever external software you run on it.

Now, to the real bottom of it: what if there were no hardware checks anywhere either? Well, as you can imagine by now, the CPU would attempt to execute the division anyway. Without safeguards, the ALU would produce invalid outputs, generating garbage results and/or overflowing the registers. A computer would not be a computer anymore, not a reliable one at least! There's a reason those checks are built in so deep in the stack.
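To make "garbage results" concrete, here's a sketch of textbook unsigned restoring division (a common shift-and-subtract scheme, not necessarily what any particular CPU uses) with the zero check deliberately left out. The function name restoring_divide is hypothetical.

```python
def restoring_divide(dividend: int, divisor: int, width: int = 8):
    """Unsigned restoring division with NO zero check, the way a bare
    shift-and-subtract datapath would run. Returns (quotient, remainder)."""
    remainder, quotient = 0, 0
    for i in reversed(range(width)):
        # Shift the next dividend bit into the partial remainder.
        remainder = (remainder << 1) | ((dividend >> i) & 1)
        quotient <<= 1
        if remainder >= divisor:
            # Trial subtraction succeeded: keep it and set this quotient bit.
            remainder -= divisor
            quotient |= 1
    return quotient, remainder

print(restoring_divide(100, 7))  # an ordinary division
print(restoring_divide(9, 0))    # no check: nonsense that looks like a number
```

With an 8-bit width, dividing 9 by 0 comes back as quotient 255 and remainder 9: every trial subtraction of zero "succeeds", so every quotient bit gets set. The answer looks like a perfectly ordinary number, which is exactly why a silent result would be worse than a fault.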

So that's the journey of an exception, from the top of the stack, down to the transistors. The worlds of software and hardware intertwine in a powerful and fascinating way, to make computers more robust and reliable. And what seems like a foreign part of the stack, or something high-level programmers will never have to worry about, is sometimes just a few abstraction layers away and not that difficult to grasp.